What is Amazon EventBridge Pipes?

✓ It's a fairly new feature that AWS announced at re:Invent 2022.

✓ The service is available in the Amazon EventBridge console.

✓ EventBridge Pipes helps you connect your sources and targets seamlessly, without writing any integration code.

✓ When you are building an event-driven architecture, give EventBridge Pipes a try and see the magic.

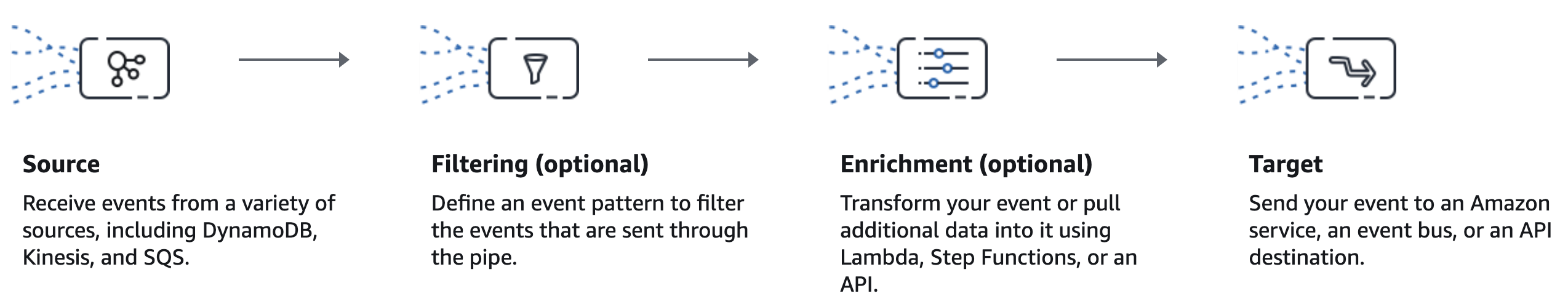

✓ You can set up a pipe easily: choose your source, add filtering (optional), add an enrichment step (optional), and add your target. That's all, you are done!
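To make the four steps concrete, here is a rough sketch of a pipe definition written as the keyword arguments one could pass to `create_pipe` on the boto3 `pipes` client. The pipe name, every ARN, and the `status` field in the filter are placeholders assumed for illustration; none of them come from a real account.

```python
import json

# Placeholder ARNs: assumptions for illustration only.
SOURCE_QUEUE_ARN = "arn:aws:sqs:us-east-1:123456789012:orders-queue"
ENRICHMENT_LAMBDA_ARN = "arn:aws:lambda:us-east-1:123456789012:function:enrich-order"
TARGET_LAMBDA_ARN = "arn:aws:lambda:us-east-1:123456789012:function:process-order"
PIPE_ROLE_ARN = "arn:aws:iam::123456789012:role/orders-pipe-role"


def build_pipe_params(name):
    """Assemble source, optional filter, optional enrichment, and target."""
    # Filter patterns are passed to the API as JSON strings.
    pattern = json.dumps({"body": {"status": ["ORDER_PLACED"]}})
    return {
        "Name": name,
        "RoleArn": PIPE_ROLE_ARN,
        "Source": SOURCE_QUEUE_ARN,
        "SourceParameters": {
            "FilterCriteria": {"Filters": [{"Pattern": pattern}]}
        },
        "Enrichment": ENRICHMENT_LAMBDA_ARN,  # optional step
        "Target": TARGET_LAMBDA_ARN,
    }


params = build_pipe_params("orders-pipe")
print(json.dumps(params, indent=2))

# With boto3 installed and credentials configured, this could be sent as:
#   boto3.client("pipes").create_pipe(**params)
```

The filter and enrichment entries can simply be left out of the dictionary when a pipe only needs a source and a target.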

How does it work? What are the sources and targets?

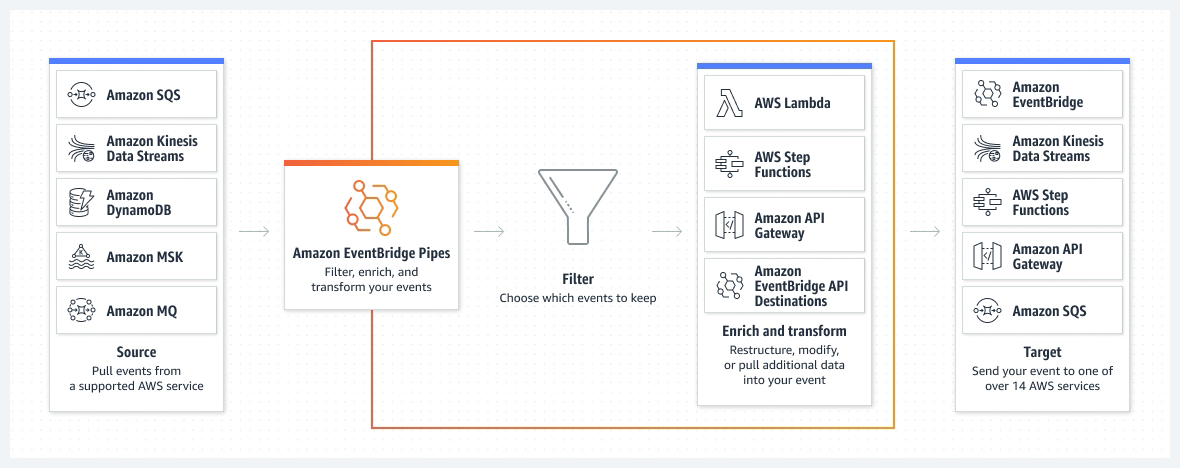

✓ Pipes currently supports Amazon SQS, Amazon Kinesis Data Streams, Amazon DynamoDB streams, Amazon MSK, self-managed Apache Kafka, and Amazon MQ as sources.

✓ Optionally, in the filtering step you can use event patterns to select only the input data you want and send it on to the next step seamlessly.

✓ Additionally, an enrichment step is available before you connect a target to your pipe. This step allows you to manipulate the data, retrieve extra data from other sources, or perform some other task with your input data before it is sent to the target.

✓ To perform this enrichment step you can use any of the services Pipes supports for enrichment: AWS Lambda, AWS Step Functions, Amazon API Gateway, or EventBridge API destinations.

✓ Here comes the final step: targets. Connect the pipe to one of the available targets and you are all done.

When and where to use EventBridge Pipes?

My Thoughts 1:

✓ You have Amazon SQS connected to AWS Lambda. In this case you can introduce a pipe between SQS and Lambda and use filtering to stop unwanted data from flowing into your Lambda function. By adding filter patterns to the pipe you can also reject malformed or unstructured payloads before they reach your function. This saves some cost, some lines of code, and some execution time in your Lambda function.
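As a small illustration of the filtering idea, the sketch below defines a filter pattern for SQS messages and simulates locally which messages would get through. The `type` and `status` fields are hypothetical, and the `matches` helper is a simplified exact-match check written for this post, not the full EventBridge pattern syntax.

```python
import json

# Hypothetical filter: only pass messages whose JSON body has
# "type" equal to "order" and a "status" of "NEW" or "PAID".
FILTER_PATTERN = {"body": {"type": ["order"], "status": ["NEW", "PAID"]}}


def matches(pattern, event):
    """Simplified exact-match check; not the full EventBridge pattern syntax."""
    for key, expected in pattern.items():
        if not isinstance(event, dict) or key not in event:
            return False
        value = event[key]
        if isinstance(expected, dict):
            # Nested pattern: descend into the nested part of the event.
            if not matches(expected, value):
                return False
        elif value not in expected:
            # Leaf pattern: a list of allowed values.
            return False
    return True


def parse_sqs_message(message):
    """Return the message with its body parsed as JSON, or None if malformed."""
    try:
        body = json.loads(message["body"])
    except (KeyError, TypeError, ValueError):
        return None
    return {**message, "body": body}


messages = [
    {"messageId": "1", "body": json.dumps({"type": "order", "status": "NEW"})},
    {"messageId": "2", "body": json.dumps({"type": "order", "status": "CANCELLED"})},
    {"messageId": "3", "body": "not-json"},
]

passed = []
for message in messages:
    parsed = parse_sqs_message(message)
    if parsed is not None and matches(FILTER_PATTERN, parsed):
        passed.append(parsed["messageId"])

print(passed)  # only message "1" reaches the Lambda function
```

In a real pipe the pattern would be serialized with `json.dumps(FILTER_PATTERN)` and set as the filter on the source, so the Lambda function never sees messages 2 and 3.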

My Thoughts 2:

✓ You have a use case where data in DynamoDB is written to a Redshift cluster by a Glue job. Assume the Glue job pulls the data from DynamoDB, changes the column names, and puts the result into Redshift. For a simple use case like this you can drop the Glue job and introduce a pipe instead: point the source at your DynamoDB stream, rename the columns with an enrichment step or the target's input transformer (filtering only selects events, it does not change them), and connect your Redshift cluster as the target. Then see the magic, and quite possibly see your AWS bill go down, since you have replaced the Glue job with a pipe.
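The column renaming in this use case could be sketched as a small Lambda function used as the pipe's enrichment step. This is only one possible approach, and the attribute names in `COLUMN_RENAMES` are made up for the example. The function assumes it receives a batch of DynamoDB stream records as a list and returns the reshaped rows.

```python
# Hypothetical mapping from DynamoDB attribute names to Redshift column names.
COLUMN_RENAMES = {"custId": "customer_id", "ordTotal": "order_total"}


def unwrap(attribute):
    """Turn a typed attribute such as {"S": "abc"} into a plain value.

    Only scalar types are handled here; nested maps and lists are
    returned as they are.
    """
    type_code, value = next(iter(attribute.items()))
    if type_code == "N":
        try:
            return int(value)
        except ValueError:
            return float(value)
    return value


def handler(events, context=None):
    """Rename columns on each record's new image and return flat rows."""
    rows = []
    for record in events:
        image = record.get("dynamodb", {}).get("NewImage")
        if image is None:  # for example, a REMOVE event has no new image
            continue
        rows.append(
            {COLUMN_RENAMES.get(name, name): unwrap(attr) for name, attr in image.items()}
        )
    return rows


sample_batch = [
    {
        "eventName": "INSERT",
        "dynamodb": {
            "NewImage": {
                "custId": {"S": "C-1"},
                "ordTotal": {"N": "42"},
                "region": {"S": "eu"},
            }
        },
    }
]
print(handler(sample_batch))
```

Attributes that are not listed in the mapping, like `region` above, keep their original names.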

My Thoughts 3:

✓ You have event-based operations: when some items in your DynamoDB table are inserted or updated, you want to validate behind the scenes that the new or updated items are correct. In this case, connect your pipe to the DynamoDB source and add an enrichment step that validates the updated data. If something is wrong, send the details on to the pipe's target, where a Step Functions workflow can email the responsible team or contact to say that the recently updated entry in the source DynamoDB table is wrong and needs to be checked and acted on.
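The validation step described here might look something like the following Lambda enrichment function. It is a sketch under assumptions: the required fields and the email check are invented for the example, and it relies on the assumption that only what the enrichment returns is passed on to the target, so returning just the invalid items means the Step Functions workflow only hears about problems.

```python
REQUIRED_FIELDS = ("orderId", "email")  # hypothetical required attributes


def find_problems(image):
    """Return a list of human-readable problems found in one item."""
    problems = [f"missing {field}" for field in REQUIRED_FIELDS if field not in image]
    if "email" in image and "@" not in image["email"].get("S", ""):
        problems.append("invalid email")
    return problems


def handler(events, context=None):
    """Return only the inserted or modified items that fail validation."""
    invalid = []
    for record in events:
        if record.get("eventName") not in ("INSERT", "MODIFY"):
            continue
        stream_data = record.get("dynamodb", {})
        problems = find_problems(stream_data.get("NewImage", {}))
        if problems:
            invalid.append(
                {"keys": stream_data.get("Keys", {}), "problems": problems}
            )
    return invalid


sample_batch = [
    {
        "eventName": "INSERT",
        "dynamodb": {
            "Keys": {"orderId": {"S": "A1"}},
            "NewImage": {"orderId": {"S": "A1"}, "email": {"S": "a@example.com"}},
        },
    },
    {
        "eventName": "MODIFY",
        "dynamodb": {
            "Keys": {"orderId": {"S": "B2"}},
            "NewImage": {"orderId": {"S": "B2"}, "email": {"S": "not-an-email"}},
        },
    },
]
print(handler(sample_batch))
```

The workflow on the target side can then read `keys` and `problems` from each entry to build the email.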

Tags:

#aws #architecture #eventbridge #eventbridgepipes #cloud

Have a Great Day

:)
